The images were processed in Agisoft Metashape, which uses a technique called ‘structure from motion’ (SfM). Structure from motion combines photogrammetry with computer vision techniques to determine from which position and angle each photo was taken, which makes it possible to reconstruct the photographed object in 3D. To do this, the software searches for points that recur across multiple photos (Papadopoulos, n.d.). These common points form a point cloud that can be turned into a three-dimensional mesh. Once the mesh has been created, texture captured from the photos is laid over it, resulting in a complete 3D model (Papadopoulos, n.d.).
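To illustrate how a point that recurs in several photos becomes a point in 3D, the sketch below uses linear (DLT) triangulation from two views. It assumes the camera projection matrices are already known; in a real SfM pipeline they are estimated together with the points. This is a textbook method shown for illustration, not necessarily what Metashape does internally, and the function names and example cameras are hypothetical.

```python
import numpy as np


def triangulate_point(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point seen in two images.

    P1, P2: 3x4 camera projection matrices (assumed known here).
    x1, x2: (u, v) image coordinates of the same feature in each photo.
    Returns the estimated 3D point as a length-3 array.
    """
    # Each observation gives two linear constraints on the homogeneous point X:
    # u * (P[2] @ X) - P[0] @ X = 0 and v * (P[2] @ X) - P[1] @ X = 0
    A = np.array([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # Least-squares solution: right singular vector with the smallest singular value
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]  # homogeneous -> Euclidean coordinates


def project(P, X):
    """Project a 3D point X with camera matrix P to image coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


# Two hypothetical cameras: identity intrinsics, the second one shifted 1 unit along x
P1 = np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])

# A point on the object, its two (simulated) observations, and the reconstruction
X_true = np.array([0.5, 0.2, 4.0])
x1, x2 = project(P1, X_true), project(P2, X_true)
X_est = triangulate_point(P1, P2, x1, x2)
print(X_est)
```

Repeating this for many matched points is what produces the point cloud from which the mesh is built.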

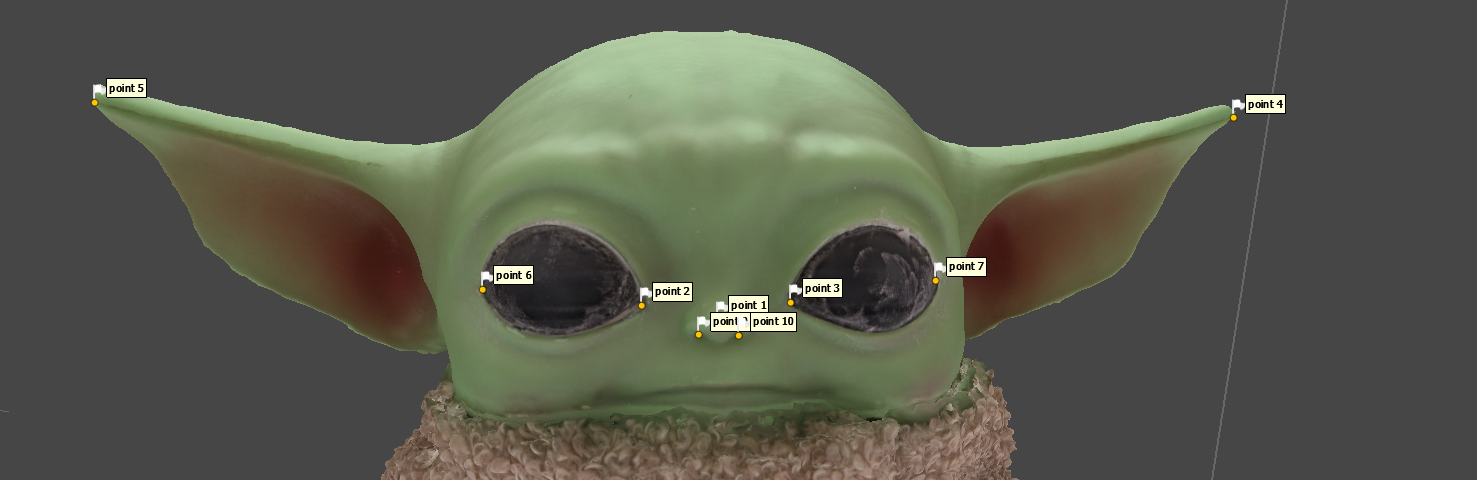

During our project we carried out this reconstruction process twice: once with the plush upright and once upside down. The second pass was needed to capture the bottom of the plush, which is otherwise not visible. This resulted in two models that had to be merged. Metashape offers two methods for this: point-based alignment and marker-based alignment. We first attempted point-based alignment, in which the software aligns the models using points that it selects automatically. However, this did not produce the desired result. Marker-based alignment, in contrast, worked exceptionally well: by manually placing markers on distinctive (‘unique’) points that appear on both models, we were able to align them successfully.
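Conceptually, once the same markers have been placed on both models, aligning them amounts to finding the rotation, translation and scale that map one set of marker coordinates onto the other. The sketch below solves this with the Umeyama least-squares method on hypothetical marker coordinates; it illustrates the principle and is an assumption, not Metashape's own implementation.

```python
import numpy as np


def align_markers(src, dst):
    """Estimate scale s, rotation R and translation t so that s * R @ p + t ≈ q
    for corresponding marker positions p in src and q in dst (Umeyama method).

    src, dst: (N, 3) arrays of matching marker coordinates, N >= 3, not collinear.
    """
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst

    # Cross-covariance between the centred marker sets
    cov = dst_c.T @ src_c / len(src)
    U, S, Vt = np.linalg.svd(cov)

    # Guard against returning a reflection instead of a proper rotation
    D = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        D[2, 2] = -1.0

    R = U @ D @ Vt
    var_src = (src_c ** 2).sum() / len(src)
    s = np.trace(np.diag(S) @ D) / var_src
    t = mu_dst - s * R @ mu_src
    return s, R, t


# Hypothetical marker positions on the upright model (arbitrary units)
upright = np.array([[0.0, 0.0, 0.0],
                    [1.0, 0.0, 0.0],
                    [0.0, 1.0, 0.0],
                    [0.0, 0.0, 1.0],
                    [1.0, 1.0, 1.0]])

# The same markers on the upside-down model: rotated 180 degrees about the x-axis,
# scaled by 2 and shifted, as if that model lived in its own coordinate frame
flip = np.array([[1.0, 0.0, 0.0],
                 [0.0, -1.0, 0.0],
                 [0.0, 0.0, -1.0]])
upside_down = 2.0 * upright @ flip.T + np.array([3.0, -1.0, 5.0])

# Bring the upside-down model into the frame of the upright one
s, R, t = align_markers(upside_down, upright)
aligned = s * upside_down @ R.T + t
print(np.abs(aligned - upright).max())  # close to zero (floating-point round-off)
```

Because the markers are chosen by hand on clearly identifiable spots, the correspondences are known exactly, which is one plausible reason why this approach can succeed where automatically selected points fail.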

The completed model combines the head of the upright model, which was of superior quality, with the bottom of the upside-down model, since only that version captured the underside of the toy. In addition, we created masks in Metashape by outlining the object in every photo and then applying these masks to the models, so that features such as the background were removed more thoroughly. Despite having made the masks, we found it more convenient and efficient to edit the model directly using Metashape’s ‘erase/flatten’ tools.
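The principle behind a mask can be illustrated with a small sketch: given a binary mask per photo (True where the object is), any detected feature point that falls on the background is discarded before matching. This is only an illustration of the idea; how Metashape applies masks internally, as well as the function and variable names below, are assumptions.

```python
import numpy as np


def filter_keypoints(keypoints, mask):
    """Keep only keypoints that lie inside the object mask.

    keypoints: (N, 2) array of (x, y) pixel coordinates.
    mask: 2D boolean array indexed as mask[row, col], True on the object.
    """
    # Round to the nearest pixel and convert to integer indices
    cols = np.rint(keypoints[:, 0]).astype(int)
    rows = np.rint(keypoints[:, 1]).astype(int)

    # Discard points outside the image as well as points on the background
    h, w = mask.shape
    in_bounds = (rows >= 0) & (rows < h) & (cols >= 0) & (cols < w)
    keep = np.zeros(len(keypoints), dtype=bool)
    keep[in_bounds] = mask[rows[in_bounds], cols[in_bounds]]
    return keypoints[keep]


# Hypothetical 6x8 photo in which the plush occupies a central rectangle
mask = np.zeros((6, 8), dtype=bool)
mask[1:5, 2:6] = True

keypoints = np.array([[3.0, 2.0],   # on the plush
                      [0.0, 0.0],   # background corner
                      [5.2, 4.1],   # on the plush (rounds to x=5, y=4)
                      [7.0, 5.0],   # background
                      [9.0, 1.0]])  # outside the image
kept = filter_keypoints(keypoints, mask)
print(kept)
```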